Deliver Clean and Safe Code for Your Python Applications

Introduction

Developers today shoulder great responsibility: not only are they solving some of the industry’s biggest challenges, but they are also expected to deliver those solutions with the highest code quality and security standards from the get-go.

The success story highlighted here demonstrates why static code analysis tools are essential in this effort to improve Code Quality and Code Security and how they have helped the Python community identify (and fix) bugs and vulnerabilities in some prominent open source Python projects.

Growing Technical Debt and Security Fears

With the rapid growth of Python applications spanning new and innovative use cases, there is mounting pressure on development teams to meet deadlines and deliver projects on schedule, often leaving code reliability, maintainability, and security on the back burner. Doing so not only racks up long-term technical debt but can also leave gaping security holes in code bases.

With the common objective of catching issues earlier in the development process (the shift-left approach), teams are often interested in new and advanced tools, and want to see whether they fit well into their workflow, are intuitive enough, and help them achieve this objective. Thankfully, there are many tools and workflows that can assist Python users. In fact, the Python ecosystem is rich in linting and static analysis tools (Pylint, Flake8, Bandit, PyT, and Pysa, to name a few), and most Python developers are already using one or more of these.

At SonarSource, we are intimately familiar with the needs (and pains) of the dev community. In fact, we believe only developers can have a sustainable impact on Code Quality & Code Security. That is why we build open-source and commercial code analyzers (SonarLint, SonarCloud, and SonarQube) with a mission to empower teams to solve coding issues within their existing workflows. In this mission, we shoulder a large responsibility as well: providing precise and actionable feedback, and finding issues with high precision (i.e. minimizing false positives).

In the next section we will talk about how analyzing popular open source Python projects is not only an opportunity to provide useful feedback to contributors, but also a way to continually improve the quality and precision of static code analysis tools: a collaborative, community-fuelled circle.

Bugs and Vulnerabilities Discovered in Popular Python Projects

We analyzed a few open-source projects (tensorflow, numpy, salt, sentry, and biopython) with SonarCloud to identify any gaping holes. These high-visibility open source projects are used by thousands of other projects, and their development workflows include every best practice: code reviews, test coverage, and the use of more than one analysis tool (flake8, pylint, Bandit …). The results proved quite interesting. Needless to say, the issues were promptly acknowledged and fixed by those projects.

"Some of the things [SonarCloud] spots are impressive (probably driven by some introspection and/or type inference), not just the simple pattern matching that I am used to in most of the flake8 ecosystem."

--- Peter J. A. Cock - maintainer of BioPython (original post here)

Highlighted below are a few examples of projects analyzed and the issues that were missed by other tools but flagged by SonarCloud. As you read through those, it might be interesting to not only think about the tooling capability, but also consider it as a learning opportunity to proactively avoid similar pitfalls in the future.

Type errors

The example below shows an issue found in TensorFlow, an open source platform for machine learning developed by Google.

SonarCloud has a type inference engine, which enables it to detect advanced type errors. It uses every bit of information it can find to deduce the type of a variable, including Typeshed stubs, assignments, and your type annotations. At the same time, it won't complain if you don't use type annotations, and it's designed to avoid false positives.

Here the control flow analysis is what allows it to understand that state_shape is a tuple because it is assigned output_shape[1:] when output_shape is a tuple. The algorithm is able to ignore the later list assignments to output_shape.

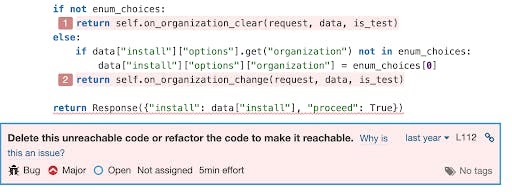
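As a rough illustration of this kind of issue, here is a minimal sketch. It is not the actual TensorFlow code: the function names and the appended value are hypothetical and only reuse the variable names mentioned above.

```python
def buggy_state_shape(output_shape: tuple) -> tuple:
    # Slicing a tuple yields a tuple, so state_shape is a tuple here.
    state_shape = output_shape[1:]
    # Rebinding output_shape to a list later does not change state_shape.
    output_shape = list(output_shape)
    # Bug: a tuple cannot be concatenated with a list (TypeError at runtime).
    return state_shape + [1]


def fixed_state_shape(output_shape: tuple) -> tuple:
    state_shape = output_shape[1:]
    # Fix: concatenate with a tuple so both operands have the same type.
    return state_shape + (1,)
```

A tool that only looked at the last assignment to output_shape could wrongly conclude that state_shape is a list; following the control flow is what makes the tuple type visible.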

Unreachable code

The example below shows an issue found in Sentry, an error and performance monitoring platform written in Python.

Detecting dead code is easy when it's just after a return or a raise statement. It's a little harder when the return is conditional. SonarCloud uses a control flow graph to detect cases where multiple branches exit just before reaching a statement.

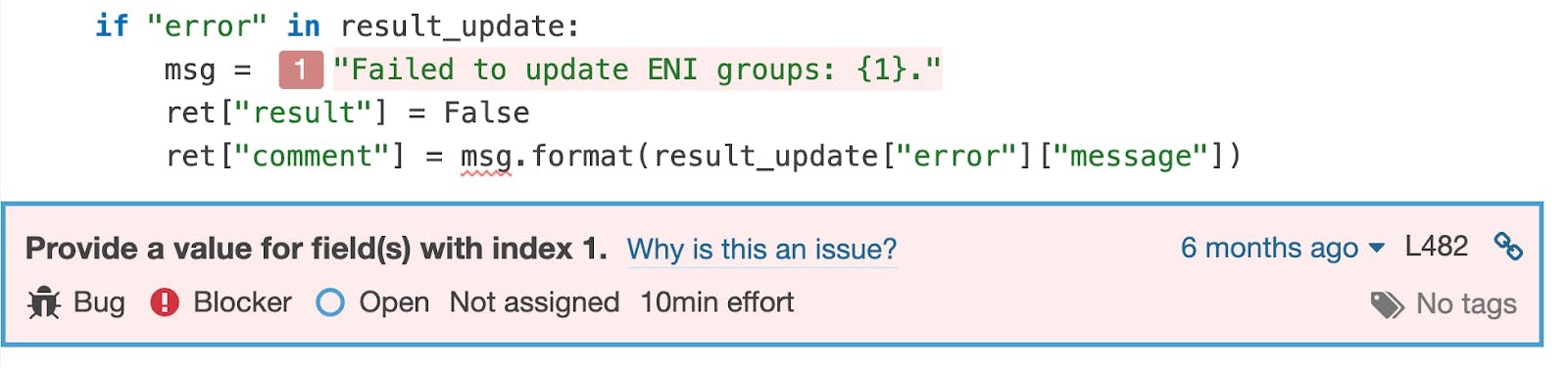
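The pattern can be sketched as follows. This is a hypothetical function, not the code from Sentry; it only shows a statement that no execution path can reach because every branch before it exits.

```python
def describe(value: int) -> str:
    if value < 0:
        return "negative"
    else:
        return "non-negative"
    # Unreachable: both branches above return, so control never gets here.
    # No single 'return' sits directly before this line, which is why a
    # control flow graph is needed to spot it.
    print("finished describing", value)
```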

Wrong fields in formatted strings

The example below shows an issue found in the Salt Project, a Python package for event-driven automation, remote task execution, and config management.

It is quite common to reference the wrong field name or index during string formatting. Pylint and Flake8 have rules detecting this problem with string literals, but they miss bugs when the format string is in a variable.

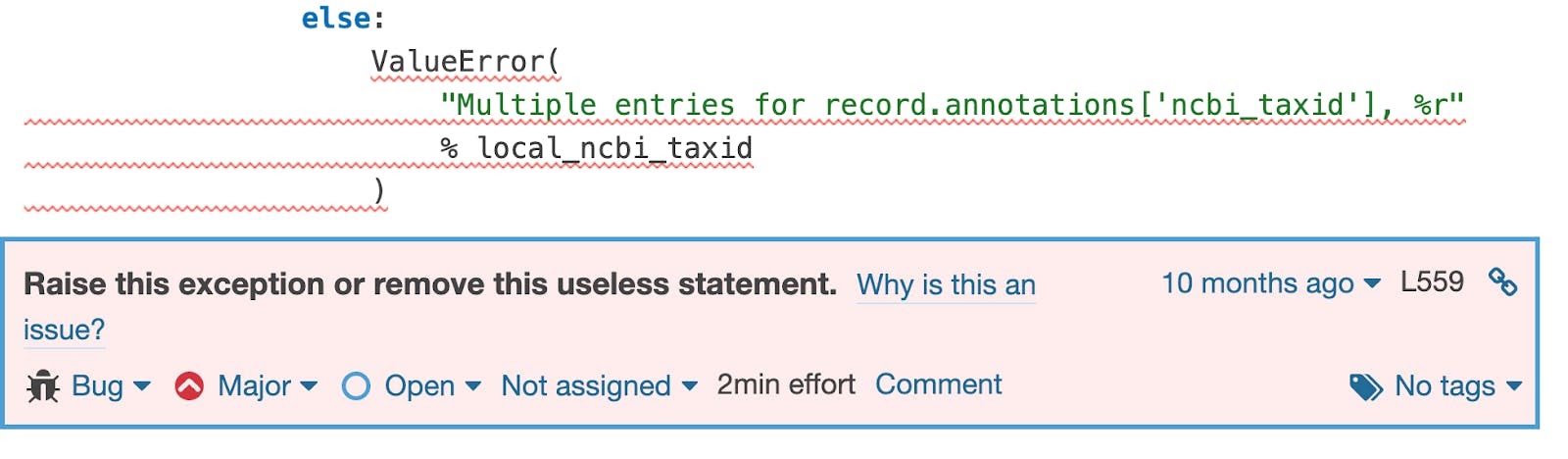
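A minimal sketch of this kind of bug, assuming a hypothetical message template (this is not the code from Salt): the format string lives in a variable, and one of its fields is never supplied.

```python
def buggy_message(name: str, count: int) -> str:
    # The format string is stored in a variable rather than used as a literal.
    template = "Minion {name} returned {count} results on {host}"
    # Bug: the 'host' field is never passed, so format() raises KeyError.
    return template.format(name=name, count=count)


def fixed_message(name: str, count: int, host: str) -> str:
    template = "Minion {name} returned {count} results on {host}"
    # Fix: every field referenced by the template is supplied.
    return template.format(name=name, count=count, host=host)
```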

Unraised exceptions

The example below shows an issue found in Biopython, a tool for biological computation and informatics.

When we review code, we usually look at classes, variables, and other meaningful symbols, and often forget to check little details such as "is there a raise keyword before my exception?". SonarCloud analyzes the whole project to extract type hierarchies. It detects when custom exceptions are discarded, not just the built-in ones.

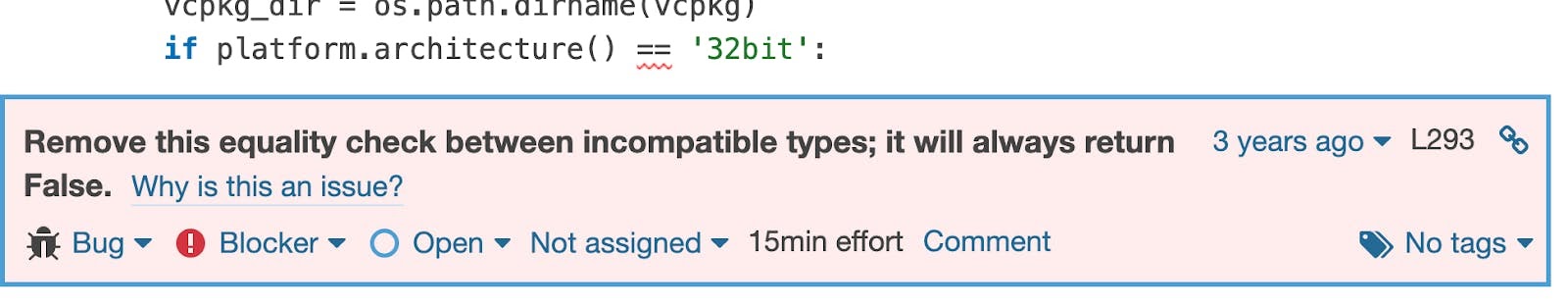
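The following sketch shows what a discarded custom exception looks like. The SequenceError class and both functions are hypothetical and are not taken from Biopython.

```python
class SequenceError(Exception):
    """Hypothetical custom exception used only for this illustration."""


def check_buggy(seq: str) -> int:
    if not seq:
        # Bug: the exception is instantiated but never raised, so the
        # object is silently discarded and execution continues.
        SequenceError("empty sequence")
    return len(seq)


def check_fixed(seq: str) -> int:
    if not seq:
        # Fix: the 'raise' keyword actually signals the error.
        raise SequenceError("empty sequence")
    return len(seq)
```

Because SequenceError is defined in the project itself, a tool has to know that it derives from Exception before it can report the missing raise.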

Comparisons that don't make sense

The example below shows an issue found in NumPy, a numerical computing library for large multi-dimensional arrays and matrices.

SonarCloud has many rules detecting code which doesn't make sense. Comparing incompatible types with == never raises an error, but it always evaluates to False (or True if you use !=). Here the issue arises because platform.architecture() returns a tuple, so comparing its result directly with a value of another type can never succeed.
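A minimal sketch of such a comparison, assuming the intent is to check for a 64-bit interpreter (the function names are hypothetical, and this is not the code from NumPy):

```python
import platform


def is_64bit_buggy() -> bool:
    # platform.architecture() returns a (bits, linkage) tuple, so comparing
    # it with a string is always False, whatever the platform.
    return platform.architecture() == "64bit"


def is_64bit_fixed() -> bool:
    # Fix: unpack the tuple and compare only the 'bits' element.
    bits, _linkage = platform.architecture()
    return bits == "64bit"
```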

These are just a few examples. You can find all the issues that were flagged and fixed by the project maintainers in the individual GitHub projects: tensorflow, numpy, salt, sentry, and biopython.

The Right Guidance at the Right Place and Time

Building and maintaining great software involves many teams using multiple tools, and it’s important to empower teams with tools that provide the right guidance at the right time and place.

SonarLint, SonarQube, and SonarCloud can help Python developers do their job well by:

- Automating code analysis, starting in the IDE, continuing through code review workflows, and extending to deployment pipelines.

- Detecting a broad range of Code Quality and Code Security issues earlier in the development cycle.

- Helping teams to ‘Clean as you Code’ so that new code meets the highest quality and security standards and long-term technical debt is reduced as a result.

To quickly detect issues as you write code in your IDE, SonarLint (open source and completely free) is available from the marketplace of your IDE of choice or at www.sonarlint.org/

SonarQube and SonarCloud are on-prem and cloud solutions, respectively, that help projects detect bugs, vulnerabilities, code smells, and security hotspots in code hosted on repository platforms (such as GitLab, GitHub, Azure DevOps, or Bitbucket), allowing teams to merge only high-quality and secure code. They are completely free for any open source project. You can give them a try at www.sonarqube.com or www.sonarcloud.com

If you’re curious about what bugs or vulnerabilities may hide in your Python code repository, go ahead and analyze your project as we did in the examples above. It’s a great opportunity to strengthen your dev pipeline and catch issues earlier. In the process, you may also find that it’s a nice learning opportunity and discover best practices and dangerous patterns you were not aware of.